How I created my own Twitter bot with deep learning

Celebrating my 12th Twitter anniversary, I created a bot to generate tweets that sound like mine. Here’s how I did it.

Last week was my 12th anniversary on Twitter. Instead of celebrating it with a tweet, I decided to do something better: build my own personalized Twitter bot.

The idea is to create a bot that writes tweets that make sense and sound like I wrote them myself. The first issue I faced was that most of my tweets are in Arabic, while most of the available Natural Language Processing (NLP) resources are in English. The second issue is that I write in the Egyptian Arabic dialect, which differs from the classic Arabic used in most books, news articles, and Wikipedia. Those three are the primary sources of datasets; thus most Arabic NLP datasets and models are in classic Arabic.

Tools

I used the Huggingface transformers Python library to build the model. It provides thousands of pre-trained models for different tasks, including text generation. To test and run my code, I used Google Colab and its GPUs.

The base model

The base model I used was an Arabic GPT-2 model from the Huggingface model hub. The model was trained using a classic Arabic Wikipedia dump. The model can be imported and initialized as follows:

from transformers import AutoTokenizer

from transformers import Trainer, TrainingArguments,AutoModelWithLMHead

tokenizer = AutoTokenizer.from_pretrained("akhooli/gpt2-small-arabic")

model = AutoModelWithLMHead.from_pretrained("akhooli/gpt2-small-arabic")

Now, we have a tokenizer to process Arabic text and a model to generate new text based on a context text (history). Let’s call this model the classic Arabic model. To run the model and generate text, we need to create a pipeline.

from transformers import pipeline

classic_ar_bot = pipeline('text-generation',model=model, tokenizer=tokenizer,config={'max_length':60})
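To make the role of the context text and the length limit concrete, here is a toy sketch of autoregressive generation. It is not how GPT-2 works internally: a hand-written lookup table (`NEXT_WORD`, entirely made up) stands in for the neural network, and always picking the single listed continuation stands in for the pipeline's decoding. It only illustrates the loop of repeatedly appending the next token to the context until a limit is reached.

```python
# Toy stand-in for a language model: maps the last word to the next word.
# The table is hypothetical and exists only for this illustration.
NEXT_WORD = {
    "i": "do",
    "do": "not",
    "not": "know",
    "know": "why",
}

def generate(prompt, max_length):
    """Extend the prompt one word at a time until max_length words are reached
    or the toy model has no continuation for the last word."""
    words = prompt.lower().split()
    while words and len(words) < max_length:
        next_word = NEXT_WORD.get(words[-1])
        if next_word is None:  # no known continuation: stop early
            break
        words.append(next_word)
    return " ".join(words)

print(generate("I do", max_length=4))
```

A real model replaces the lookup table with a probability distribution over its whole vocabulary, conditioned on all previous tokens rather than only the last one.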

If we run the pipeline to generate some tweets, they won’t sound like me, unless I copy my tweets from Wikipedia in classic Arabic. For example, if I use the model to complete a sentence that starts with “I don’t know” in classic Arabic:

classic_ar_bot('انا لا أعلم')

The model outputs the following:

لا أعلم، حتى إذا كان من المقرر أن تكون هذه المسألة غير قابلة للنقاش، إلا أن أحد الجانبين قال إنه لا يوجد سوى دليل كاف

The output is a meaningful sentence in classic Arabic, and it seems like a part of a story or an article. It translates to: “I don’t know, even if this was to be a non-negotiable issue, but one side said there was only enough evidence.” If we test the model with an Egyptian Arabic sentence, it ignores the Egyptian Arabic text or treats it as a name or random text. For example, we can test the model with a sentence in Egyptian Arabic that has the same meaning:

classic_ar_bot('انا مش عارف')

The model output is:

انا مش عارف. كانت آخر بطولة دوري بطولة في تاريخها كانت في عام 1992 ضد أندية كاغاريغا ومورايغو، وفازت على مانشستر يونايتد في كأس العالم للأندية

The model generates a random sentence about sports that has nothing to do with the “I don’t know” input phrase. This shows that the model doesn’t “understand” Egyptian Arabic.

Model fine-tuning with Egyptian Arabic data

I decided to fine-tune the model using a text dataset in Egyptian Arabic.

Dataset

The dataset used is Corpus on Arabic Egyptian tweets, which consists of 40,000 pre-processed tweets written in Egyptian Arabic. The tweets have sentiment annotations (positive and negative), but these are outside the scope of this task.

Rania Kora; Ammar Mohammed, 2019, “Corpus on Arabic Egyptian tweets”, https://doi.org/10.7910/DVN/LBXV9O, Harvard Dataverse, V1

Fine-tuning

First, I import the needed dependencies:

import pandas as pd

import json

import re

from sklearn.model_selection import train_test_split

Then, I convert the tweets TSV into two datasets stored in text files.

# save dataset as a text file

def build_text_files(data, dest_path):

    # join all tweets with spaces and write them as UTF-8 text

    with open(dest_path, 'w', encoding='utf-8') as f:

        f.write(" ".join(data))

# load dataset into a Pandas DataFrame

data_df = pd.read_csv('40000-Egyptian-tweets.tsv', sep='\t', header=0)

# split dataset into training and validation

train, val = train_test_split(data_df["review"].tolist(), test_size=0.20)

# create the text files

build_text_files(train, 'train_dataset.txt')

build_text_files(val, 'val_dataset.txt')
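For readers without scikit-learn at hand, the 80/20 split can be sketched with the standard library alone. This is an illustrative equivalent, not what `train_test_split` does internally; the `split_dataset` helper, the fixed `seed`, and the placeholder strings are assumptions introduced here.

```python
import random

def split_dataset(items, val_fraction=0.2, seed=42):
    """Shuffle a copy of items and split it into (train, validation) lists."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # seeded shuffle, input left untouched
    n_val = int(round(len(shuffled) * val_fraction))
    return shuffled[n_val:], shuffled[:n_val]

tweets = [f"tweet {i}" for i in range(10)]  # placeholder data
train_part, val_part = split_dataset(tweets)
print(len(train_part), len(val_part))
```

Fixing the seed makes the split reproducible between runs; `train_test_split` accepts a `random_state` argument for the same purpose.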

I split the dataset into 80% training and 20% validation. Now I load both parts into Huggingface dataset objects:

from transformers import TextDataset, DataCollatorForLanguageModeling

def load_dataset(train_path, val_path, tokenizer):

    # tokenize each text file and cut it into blocks of 128 tokens

    train_dataset = TextDataset(

        tokenizer=tokenizer,

        file_path=train_path,

        block_size=128)

    val_dataset = TextDataset(

        tokenizer=tokenizer,

        file_path=val_path,

        block_size=128)

    # mlm=False: causal language modeling (GPT-2 style), not masked language modeling

    data_collator = DataCollatorForLanguageModeling(

        tokenizer=tokenizer, mlm=False,

    )

    return train_dataset, val_dataset, data_collator

train_path = 'train_dataset.txt'

val_path = 'val_dataset.txt'

train_dataset, val_dataset, data_collator = load_dataset(train_path, val_path, tokenizer)
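The `block_size=128` argument controls how each text file is cut into training examples. As a simplified sketch of the idea (not the library's actual code): the whole file is tokenized into one long sequence of token ids, which is then sliced into consecutive blocks of `block_size` ids, and a trailing remainder shorter than a full block is dropped. The `chunk_token_ids` helper and the fake ids below are assumptions for illustration.

```python
def chunk_token_ids(token_ids, block_size):
    """Slice a long token-id sequence into full blocks of block_size ids.
    A trailing remainder shorter than block_size is discarded."""
    return [
        token_ids[i:i + block_size]
        for i in range(0, len(token_ids) - block_size + 1, block_size)
    ]

fake_ids = list(range(300))  # stand-in for a tokenized text file
blocks = chunk_token_ids(fake_ids, block_size=128)
print(len(blocks), [len(b) for b in blocks])
```

Because the tweets are joined with spaces into a single file before chunking, one block can span several tweets.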

Now, the dataset is ready to be used to fine-tune the model. I trained the model using the following parameters:

training_args = TrainingArguments(

output_dir="/content/model_40k", #The output directory

overwrite_output_dir=False, # do not overwrite the content of the output directory

num_train_epochs=7, # number of training epochs

per_device_train_batch_size=16, # batch size for training

per_device_eval_batch_size=32, # batch size for evaluation

eval_steps = 100, # Number of update steps between two evaluations.

logging_steps = 100,

save_steps=800, # after # steps model is saved

warmup_steps=100,# number of warmup steps for learning rate scheduler

prediction_loss_only=True,

evaluation_strategy='steps',

learning_rate=5e-5,

weight_decay=0.01

)

trainer = Trainer(

model=model,

args=training_args,

data_collator=data_collator,

train_dataset=train_dataset,

eval_dataset=val_dataset,

)

trainer.train()

trainer.save_model() # save the final model to output_dir so it can be reloaded from there

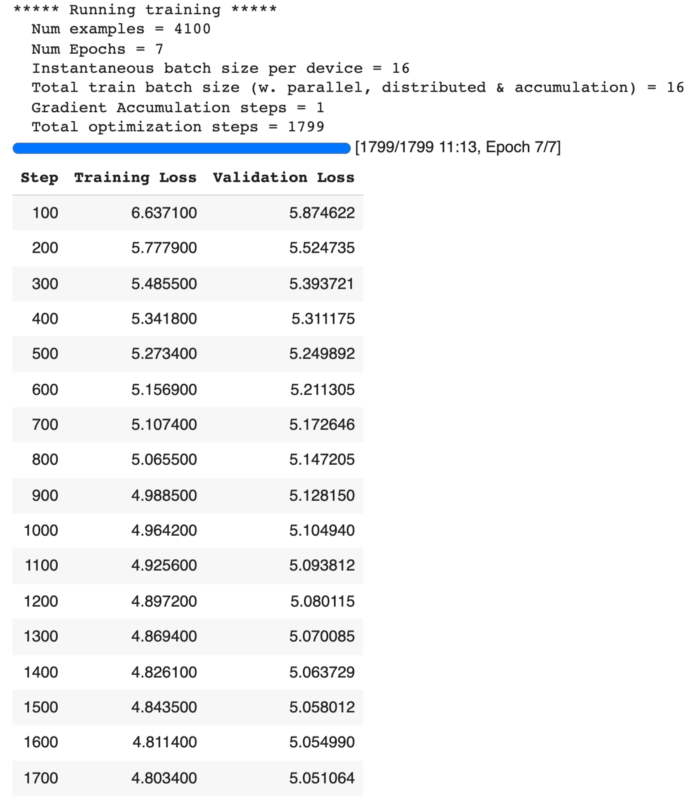

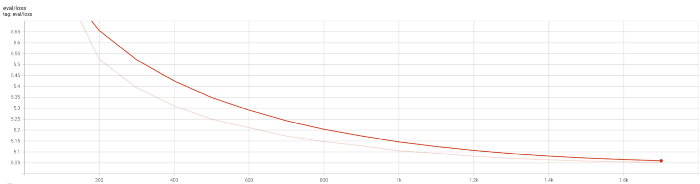

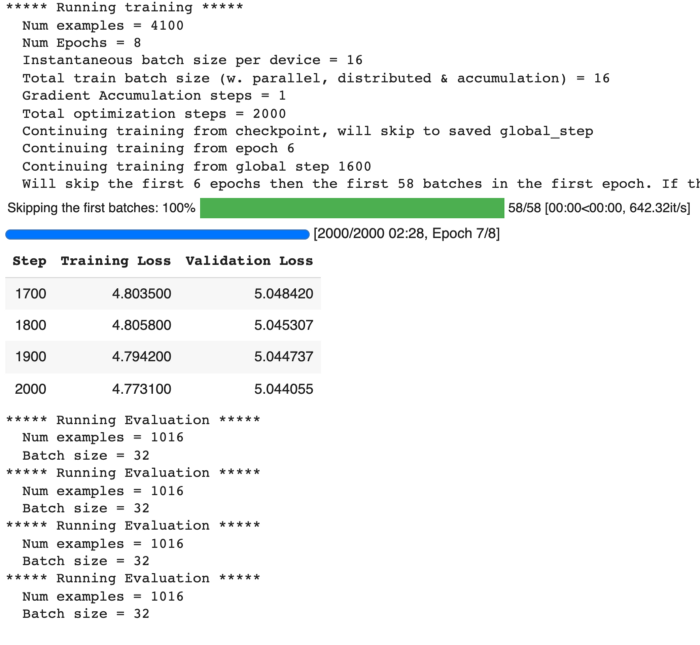

I trained the model for 7 epochs, by which point the decrease in validation loss had slowed down (the loss starts to saturate).

Model training (Fine-tuning)

Model eval loss
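The `warmup_steps` and `learning_rate` settings interact through the learning-rate schedule. Assuming the Trainer's default linear schedule (linear warmup from 0 to the peak rate, then linear decay to 0), the rate at a given step can be sketched as below. The `total_steps` value of 1000 is a made-up placeholder; the real number depends on the dataset size, the batch size, and the number of epochs.

```python
def linear_schedule_lr(step, peak_lr=5e-5, warmup_steps=100, total_steps=1000):
    """Learning rate at a given optimizer step for linear warmup + linear decay."""
    if step < warmup_steps:
        # ramp up linearly from 0 to peak_lr during warmup
        return peak_lr * step / max(1, warmup_steps)
    # decay linearly from peak_lr down to 0 at total_steps
    remaining = max(0, total_steps - step)
    return peak_lr * remaining / max(1, total_steps - warmup_steps)

for step in (0, 50, 100, 550, 1000):
    print(step, linear_schedule_lr(step))
```

With these settings the rate peaks at step 100 and is back to half the peak value midway through the decay phase.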

Now, let’s test the new fine-tuned model:

model = AutoModelWithLMHead.from_pretrained("/content/model_40k")

egy_ar_bot = pipeline('text-generation',model=model, tokenizer=tokenizer,config={'max_length':60})

Testing the model with the same sentence in Egyptian Arabic that translates into “I don’t know”:

egy_ar_bot('انا مش عارف')

The output is:

‘انا مش عارف ايه اللى انا انا فى كل حاجة هخفي حد ده يا اخى. انا بعشق امتحانات اكثر امتحان’

This translates into: ‘I do not know what I am in everything, I will hide this limit, my brother. I love exams more exams’. The model can now generate Egyptian Arabic sentences, but one thing is missing: it still does not sound like me, because I don’t love exams.

Personalizing the bot

Download Twitter data

I decided to fine-tune the model once again using my own Twitter data to make it sound more like me. First, I requested my data, and I had to wait one day before I could download it from Twitter. This option is available to anyone, and there is no need for a developer account.

Requesting the data from Twitter settings

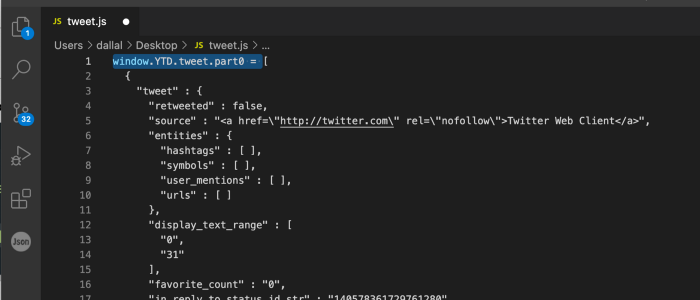

After downloading the data, the file containing the tweets is called “tweet.js”, and it’s located in the “data” folder. Next, I copy the text starting at the opening bracket “[” in the first line into a JSON file and name it “tweet.json”.
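The manual copy step can also be done in code. The sketch below assumes the archive file starts with a JavaScript assignment (something like `window.YTD.tweet.part0 = `, which may differ between archive versions) followed by a JSON array; it simply parses everything from the first `[` onward. The `parse_tweet_js` helper and the sample string are illustrative, not part of the original workflow.

```python
import json

def parse_tweet_js(js_text):
    """Return the list of tweet records from the contents of a tweet.js file
    by parsing everything from the first '[' as JSON."""
    start = js_text.index("[")  # raises ValueError if no array is present
    return json.loads(js_text[start:])

# Tiny made-up sample mimicking the assumed file layout.
sample_js = 'window.YTD.tweet.part0 = [ {"tweet": {"full_text": "انا مش عارف"}} ]'
records = parse_tweet_js(sample_js)
print(records[0]["tweet"]["full_text"])
```

With a real archive, the text would come from reading the “tweet.js” file with UTF-8 encoding instead of the sample string.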

Then, I load the data:

with open('tweet.json', encoding='utf-8') as f: # read JSON file

    data = json.load(f) # load file content into a list of Python dictionaries

texts = []

for tweet in data:

    # only keep Arabic characters and spaces

    text = "".join(re.findall(r'[\u0600-\u06FF]| ', tweet['tweet']['full_text'], re.UNICODE))

    if len(text) > 3: # ignore tweets with 3 characters or fewer

        texts.append(' '.join(text.split()))
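To see what the regular expression keeps, here is the same filtering logic wrapped in a small function and applied to a made-up tweet. The `clean_tweet` name and the sample text are assumptions for illustration. Note that the range `\u0600-\u06FF` covers the whole Unicode Arabic block, so Arabic punctuation and Arabic-Indic digits are kept along with letters, while mentions, links, Latin text, and emoji are dropped.

```python
import re

ARABIC_OR_SPACE = re.compile(r"[\u0600-\u06FF]| ")

def clean_tweet(full_text):
    """Keep only characters from the Arabic Unicode block and spaces,
    then collapse runs of whitespace into single spaces."""
    kept = "".join(ARABIC_OR_SPACE.findall(full_text))
    return " ".join(kept.split())

sample_tweet = "@someone انا مش عارف https://t.co/xyz 😂"
print(clean_tweet(sample_tweet))
```

A tweet written entirely in Latin characters comes out as an empty string, which is why the length check afterwards matters.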

Now, I can split the data and write it to text files, ready to be loaded into a Huggingface dataset:

train, test = train_test_split(texts,test_size=0.1)

build_text_files(train,'train_dataset.txt')

build_text_files(test,'test_dataset.txt')

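After loading the new text files with load_dataset as before (so that train_dataset and val_dataset now contain my tweets), the model can be fine-tuned using the new dataset as follows: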

training_args = TrainingArguments(

output_dir="/content/personal", #The output directory

overwrite_output_dir=False, # do not overwrite the content of the output directory

max_steps=2000, # maximum number of steps

per_device_train_batch_size=16, # batch size for training

per_device_eval_batch_size=32, # batch size for evaluation

eval_steps = 100, # Number of update steps between two evaluations.

logging_steps = 100,

save_steps=800, # after # steps model is saved

warmup_steps=100,# number of warmup steps for learning rate scheduler

prediction_loss_only=True,

evaluation_strategy='steps',

learning_rate=5e-5,

weight_decay=0.01

)

trainer = Trainer(

model=model,

args=training_args,

data_collator=data_collator,

train_dataset=train_dataset,

eval_dataset=val_dataset,

)

trainer.train()

This time I set the maximum number of steps to 2000, since I will use the fine-tuned model checkpoint saved after 1600 steps.
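The choice of 2000 steps fits with `save_steps=800`. Assuming the Trainer writes a checkpoint every `save_steps` optimizer steps into a `checkpoint-<step>` subfolder of `output_dir` (the usual naming, though worth verifying on your library version), the expected checkpoint folders can be listed as follows. The `checkpoint_dirs` helper is hypothetical.

```python
def checkpoint_dirs(output_dir, save_steps, max_steps):
    """List the checkpoint folders expected from periodic saving alone."""
    return [
        f"{output_dir}/checkpoint-{step}"
        for step in range(save_steps, max_steps + 1, save_steps)
    ]

print(checkpoint_dirs("/content/personal", save_steps=800, max_steps=2000))
```

Under that assumption, the last periodic checkpoint within 2000 steps is the one at step 1600. Depending on the library version, a final checkpoint may also be written when training ends.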

The model is trained for two epochs:

Model Training

Now, the model can generate the following sentence:

“انا مش عارف ايه . أنا من اللي بجد من الهم لما حد يتاكدك يا عم. ان شاء الله هو خير له.”

The sentence has phrases that I normally use. The phrases make sense, but the full sentence seems as if it’s taken out of context. Still, the model sounds like me!

Conclusion

It took me a few hours to celebrate my Twitter anniversary by creating a personalized text generation bot. The key to training such models is the availability of datasets, since pre-trained models have made the process of creating such systems much easier.